In this episode of The Mobile GameDev Playbook, we’re looking at the use of AI moderation in social gaming.

GameRefinery analyst and podcast regular Kalle Heikkinen joins host Jon Jordan to kick off the podcast with all things social, including the emergence of social hangout areas and the rise of social features in mobile games.

While social features create endless opportunities for games to succeed (look at Roblox!), there is also a toxic content dilemma that comes with gamers being able to communicate with one another freely. CEO and Co-Founder of Utopia Analytics, Dr Mari-Sanna Paukkeri, brings her expertise to discuss AI moderation, toxicity in gaming and the intricacies behind moderating language, images and voice.

You’ll find the latest episode on YouTube and all major podcast platforms.

If you enjoy the episode, remember to hit subscribe!

Topics we will cover in this episode:

- Social elements in mobile games

- What does Utopia Analytics do?

- Toxicity and moderation in games

- How does AI moderation actually work?

Introduction

Jon Jordan: Hello, and welcome to the Mobile GameDev Playbook. Thanks for tuning in for another episode. This podcast is about what makes a great mobile game, what is and isn’t working for mobile game developers, and all the latest trends. I’m Jon Jordan, and in this episode, we’re going to focus on social gaming and the use of AI in mobile games. Joining us as our expert in that field is Dr Mari-Sanna Paukkeri, the co-founder and CEO of Utopia Analytics. She holds the world’s first PhD in language-independent text analytics, and I don’t even know what that means. How are you doing, Mari-Sanna?

Mari-Sanna Paukkeri: Very good. Thank you. We can talk about what it means later.

Jon: That’s what we’re going to talk about: what it means and how it relates to mobile games and games in general. Also joining us, we have Kalle Heikkinen, who’s a senior analyst at GameRefinery by Vungle. How’s it going, Kalle?

Kalle Heikkinen: Very good. Thanks for asking, Jon. How about you?

Jon: Yes, yes. Do you like my new background? We did it especially. I think it’s really good.

Kalle: I should have that one too. For sure.

Jon: You should demand it. Exactly. Good. In the podcast today, we’re going to start by looking at some of the trends in social gaming on mobile. Then we’ll look at some of the new technologies around AI and machine learning, and how they can help game developers keep their environments safe and legal. Let’s kick off with the social stuff. We’ve always had social features in games, but what’s been going on, particularly in mobile social games? Kalle, do you want to lead us off on that?

Social elements in mobile games

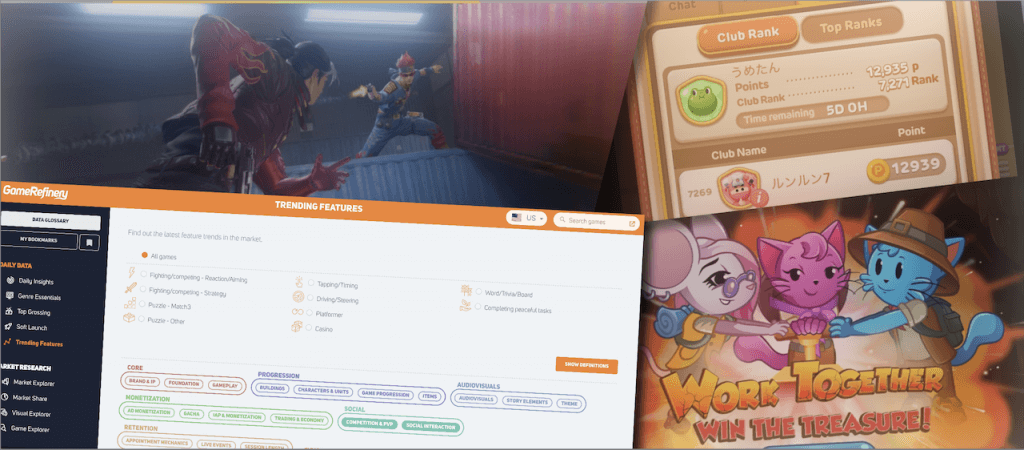

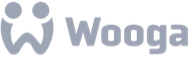

Kalle: Yes, thank you, Jon. Social elements and social features are definitely becoming a bigger and bigger part of the engagement strategy for mobile games. There are mainly three things I would point out when it comes to trends in this space. The first one is the rise of social features, especially in casual games. Traditionally, we have seen social mechanics associated especially with mid-core games; for example, the guild systems that we’ve seen in genres like 4X strategy. On the other hand, casual games have traditionally been more focused on the core action layer, and the social interaction aspects have been relatively light. You’ve had features like sending lives to other players and stuff like that. We now see that more casual games are adding social features and different kinds of spaces where people can interact with each other. I guess it’s partly because people and players are looking for social interaction and a sense of digital togetherness. That has been one catalyst for the surge in popularity of social gaming and playing together with other people.

“More casual games are adding social features where people can interact with each other because people and players are looking for social interaction and a sense of digital togetherness.”

Kalle on social features in casual games

While competition might not be the main draw for casual gamers, they still enjoy competitive elements in games, especially when they compete as part of a team. The guild feature is something we see trending, primarily in the casual scene: its utilisation among the top 100 grossing match-three puzzle games has increased by 30 percentage points during the last 12 months. Currently, more than 60% of the match-three games in the top 100 grossing have guild systems. That’s one thing.

The other thing I would mention is the emergence of features we call social hangout spaces. By those, we mean separate modes in games that are unrelated to the actual core gameplay. They often include different kinds of minigames and various ways to communicate with each other using voice chat or text chat, and the point is that you use your avatar character in these modes to engage in these activities. There can be things like photoshoots, where you take photos with each other, and all different kinds of ways to interact socially.

“More than 60% of the match-three games in the [US] top 100 grossing have guild systems”

Kalle on guild systems in match-three games

In Western games, we can think of Roblox or Fortnite’s party island as featuring this mode. In China, for example, they can be found in loads of different kinds of games. The GameRefinery data we have says that 29% of the Chinese top 200 games have this feature, and I firmly believe this has partly been boosted by COVID and people finding different ways to interact with each other digitally.

The last thing I would mention is how we see social elements combined with monetisation elements. A couple of examples to throw out here are the global battle passes we see in games like Mobile Legends: Bang Bang and PUBG Mobile. The idea is that you have a battle pass, you can form a team inside it, and the more members you invite, the better the rewards are for everyone. Another example is communal progressive IAP events, where everyone in, for example, a particular server or a certain guild receives rewards if they communally and cooperatively spend enough premium currency or real money.

So those are the three. To recap: combining social with monetisation, the emergence of social hangout areas, and the rise of social features in casual games.

Jon: Yes, that’s quite a good point you made there: to a degree, these features have all been around, even on mobile, for a decade. They came about in these very highly engaged, highly monetised 4X games, which I guess took their design cues from MMOs. I remember back in the days of Game of War, you had these intense guilds and alliances, and that was very much how they drove their monetisation, wasn’t it?

I think it was the first game I saw where, when you bought things, your guild members also got stuff, so you were obviously encouraging everyone to spend. Even if you weren’t part of an alliance, it was better to join one because you got more stuff, and once you’re in the alliance, you want to stay there. It’s interesting how that stuff filters down in different ways, from the hardcore to the mid-core. I was surprised at that stat. Let me just check I got it right: you’re saying 60% of the top 100 match-three games have this sort of feature?

Kalle: Exactly. One year ago, it was still 30%, so it has practically doubled.

Jon: Good. I guess that’s the background for what we’re going to be talking about in more depth. I’ve always felt “casual games” is the wrong term. These are not casual experiences now, when people spend lots of hours and lots of money and build social networks within them. Someone needs to come up with a better term for that.

Anyway, that’s where we are with mobile, and this is where companies like Utopia Analytics can bring their smarts into the game. Mari-Sanna, do you want to start at the top and talk a bit about what Utopia Analytics does?

What does Utopia Analytics do?

Mari-Sanna: Utopia is a text analytics company. We do any kind of text analysis, and it works for any language in the world, so basically any language or dialect can be analysed in the same way. This is unique among text analytics tools. Usually, you can analyse English and then some other languages, and that’s all, but we can do any, even a minor dialect.

Jon: With my journalist hat on, when someone says “any”, you immediately think, “Well, there must be something you can’t do.” I’m just checking: you’re saying you can use it for any language that has a written form?

Mari-Sanna: It has to be in some kind of text form, but it doesn’t need the Latin alphabet. It can be any character used, but there need to be characters to read it.

Jon: Does that mean then that all languages have the same– I’m going to get the wrong term here, but all languages must have the same structure because I guess you’re using AI to analyse how language hangs together in some clever way.

Mari-Sanna: Languages, as such, don’t have the same structure. The structures differ a lot from language to language. What is similar between all languages is that we humans, as kids, learn them from our parents and other people around us, and we start to use the language and become native speakers. That is the requirement for a language: it needs to be a natural language that people speak natively, and of course write, because we mainly analyse text.

Jon: Obviously, you’ve done your PhD, and I’m sure there’s a lot of academic work backing this stuff up, but how did you move from that academic understanding to commercialising a product? Usually there are lots of clever ideas in academia, and lots of companies get started, but they don’t quite manage the commercialisation part. That’s probably the hardest bit.

Mari-Sanna: I know what you are talking about. I’m not an academic person myself; I’m very practical. When I was working at the university as a researcher, I noticed that I was one of the most practical people there. Now I’ve found the place where I can use my academic background, all the skills I learned there, but do something that works in real life.

Our product, Utopia AI Moderator, moderates content in any language, for example in-game chats. It’s very practical, and it affects many people around the world. I find it very interesting to do something that happens in real life and helps people.

Toxicity and moderation in games

Jon: Obviously, we focus on games because games are the best thing in the world, but that’s not the way everything works. I guess your product can be used across all sorts of different industries. I don’t know what percentage of your business is in games, or if you would even tell us that, but were you surprised that games companies would be interested in this sort of stuff?

Mari-Sanna: We started by moderating news comments, which is a slower form of communication than what happens in games. We moved quickly to online marketplaces, and we have been moderating all kinds of user-generated content. The gaming industry is relatively new for us. What is different in the gaming industry is mainly the significant volumes. That is the biggest difference: the volumes are huge. Something else we find especially in the gaming industry, though of course sometimes elsewhere too, is that toxicity is quite high, which is unfortunate. I think that’s especially why AI is needed to help with moderation: the volumes are so large that you can’t rely on the traditional way, manual moderation, alone, and AI moderation helps to keep people’s communities safe.

“What is different in the gaming industry is mainly the significant volumes. The volumes are huge, and, especially in the gaming industry, toxicity is quite high, which is unfortunate.”

Mari-Sanna on how moderation in gaming differs from news or online marketplaces

Jon: I guess this probably isn’t a technical question, but is there any reason why you think toxicity is higher in games? Maybe because they’re seen as more playful spaces. I guess if you go to, I don’t know, a big newspaper and start writing rude things in their comment system, then that’s seen as not the place to do that.

Whereas maybe the problem with games is that they’re not necessarily taken very seriously, and they probably have a younger age range, so there are fewer rules. People feel like it’s a more open space. I don’t know if that’s something you can comment on.

Mari-Sanna: Frankly, I don’t know what the reason is, but there may be a few. One of them is that in newspapers, the editors want to make sure that the content on their platform is okay, so there’s a tradition of pre-moderating all content. In gaming, there’s a tradition not to moderate anything.

The first time you go in, you start learning how to play, and the other people behave however they like because there’s no one telling them what to do. It’s only recently that moderation has become more than using spam filters. It is very easy to find new ways to say upsetting things to other people in natural language. That’s one of the pain points for traditional moderation tools: they can’t understand those things, so you can still say them even if moderation is available on the platform.

“In gaming, there’s a tradition not to moderate anything. It’s only recently that moderation has become more than using spam filters.”

Mari-Sanna on traditional moderation in gaming

How does AI moderation actually work?

Jon: Okay. I’m going to phrase this question carefully, for non-experts like us who probably do think AI moderation is just looking for rude words and filtering them out, which you’ve just said it isn’t. How does it actually work? Because obviously human language is a very flexible thing, and slang words are being invented all the time. I guess, in some cases, as stuff gets moderated, people come up with new rude ways of saying things. Without getting too technical, how does it actually work?

Mari-Sanna: I’ll try not to be too technical. The basic thing is that humans need to define what kind of content to accept and what to decline. If human moderators can’t understand a message, we can’t train the AI to understand it, so we need very skilled humans to say that this message is accepted in this game and other messages are not. There are different kinds of AI. AI products are like different apps, and you need to remember that if one works in a certain way, another may be different. But the AIs we work on at Utopia learn from these human decisions, and that’s their only source of information about what they need to know.

“The basic thing is that humans need to define what kind of content to accept and what to decline. If human moderators can’t understand a message, we can’t train the AI to understand it.”

Mari-Sanna on how moderation works

We build a separate AI model for each game, so basically each game has its own rules and policies. I think it is quite a democratic way to do it, because each game defines what kind of community it has. The AI is trained on human moderation decisions and learns to mimic human moderation behaviour. It’s also very quick, so it can moderate content before it is published to the other players. Basically, they never see content that the humans have defined as bad and not acceptable there.

Our AI can also understand any sort of slang, as long as the human moderators understood that slang; if they did, the AI learns to do the same. As you said, languages change and evolve, so our AI also learns all the time. We need humans to make some moderation decisions now and then so the AI has new data to learn from, and in this way it picks up all the new things coming into the games and the discussions and starts to moderate those automatically as well.
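To make the idea concrete, here is a toy Python sketch of a moderator that learns only from human accept/reject decisions and gates each message before other players see it. It is not Utopia’s method, which the episode doesn’t detail: it swaps in a simple character-trigram naive Bayes classifier (character n-grams don’t depend on word boundaries or a particular alphabet), and the names `ToyModerator` and `pre_moderate`, as well as the four training messages, are hypothetical.

```python
import math
from collections import Counter


def char_ngrams(text: str, n: int = 3) -> list:
    """Character n-grams: they need no word boundaries and work with any alphabet."""
    padded = f" {text.lower()} "
    return [padded[i:i + n] for i in range(max(1, len(padded) - n + 1))]


class ToyModerator:
    """Hypothetical multinomial naive Bayes over character trigrams.

    It learns only from human moderator decisions; an illustration, not Utopia's method.
    """

    LABELS = ("accept", "reject")

    def __init__(self, n: int = 3) -> None:
        self.n = n
        self.gram_counts = {label: Counter() for label in self.LABELS}
        self.doc_counts = Counter()
        self.vocab = set()

    def learn(self, message: str, decision: str) -> None:
        """Record one human moderator decision ("accept" or "reject")."""
        grams = char_ngrams(message, self.n)
        self.gram_counts[decision].update(grams)
        self.doc_counts[decision] += 1
        self.vocab.update(grams)

    def log_score(self, message: str, label: str) -> float:
        # Add-one (Laplace) smoothing on the prior and the n-gram likelihoods,
        # so unseen n-grams never produce log(0).
        total_docs = sum(self.doc_counts.values())
        score = math.log((self.doc_counts[label] + 1) / (total_docs + len(self.LABELS)))
        counts = self.gram_counts[label]
        denom = sum(counts.values()) + len(self.vocab) + 1  # +1 reserves mass for unseen n-grams
        for gram in char_ngrams(message, self.n):
            score += math.log((counts[gram] + 1) / denom)
        return score

    def decide(self, message: str) -> str:
        return max(self.LABELS, key=lambda label: self.log_score(message, label))


def pre_moderate(model: ToyModerator, message: str, publish) -> bool:
    """Publish to other players only if the model accepts the message."""
    if model.decide(message) == "accept":
        publish(message)
        return True
    return False


# Hypothetical human decisions for one game; each game would get its own model.
model = ToyModerator()
for text, decision in [
    ("you are so stupid", "reject"),
    ("stupid idiot", "reject"),
    ("good game well played", "accept"),
    ("nice move friend", "accept"),
]:
    model.learn(text, decision)
```

A real system would presumably need far more labelled decisions per game, but the shape is the same: each game’s model is trained on its own moderators’ labels, and new decisions made now and then are fed back in through `learn`.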

“We build a separate AI model for each game, so basically each game has its own rules and policies. I think it is quite a democratic way to do it, because each game defines what kind of community it has.”

Mari-Sanna on how they moderate different games

Jon: That initial training process, how long does that take, or how would that play out if someone was looking to integrate it?

Mari-Sanna: We are quick at that. We promise that the model will be production-ready, at production quality, within two weeks, in any language. That’s quite good compared with many other AI tools you may have come across.

Jon: How does that break down on a language basis? Do you have to train it on a per-language basis, or are some languages broadly similar?

Mari-Sanna: It depends on the case. There are many reasons why different languages should be modelled separately, but sometimes they are combined: for example, when users mix different languages and there’s no way to separate them other than with language recognition tools. Basically, we model the languages separately if it is possible. If the different languages are on separate servers, for example, that works, but otherwise they can be combined in one model. The quality of the AI model is a bit better if we have separate models for each language; it is just a mathematical fact.
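A minimal sketch of the routing choice described above, assuming (hypothetically) that each chat server may declare a single language: use a dedicated per-language model when one exists, otherwise fall back to a combined model for mixed-language chat. The keyword-matching classifiers are placeholders standing in for trained models, and every name here is illustrative.

```python
from typing import Callable, Dict, Optional

# A classifier maps a message to "accept" or "reject" (stand-in type for this sketch).
Classifier = Callable[[str], str]


def pick_model(models: Dict[str, Classifier], combined: Classifier,
               server_language: Optional[str]) -> Classifier:
    """Prefer a dedicated per-language model when the server's language is known."""
    if server_language is not None and server_language in models:
        return models[server_language]
    # Mixed-language chat, or a language with no dedicated model: use the combined one.
    return combined


def moderate(message: str, models: Dict[str, Classifier], combined: Classifier,
             server_language: Optional[str] = None) -> str:
    return pick_model(models, combined, server_language)(message)


# Hypothetical placeholder classifiers for illustration only.
def english_model(message: str) -> str:
    return "reject" if "idiot" in message.lower() else "accept"


def finnish_model(message: str) -> str:
    return "reject" if "idiootti" in message.lower() else "accept"


def combined_model(message: str) -> str:
    text = message.lower()
    return "reject" if any(word in text for word in ("idiot", "idiootti")) else "accept"


models = {"en": english_model, "fi": finnish_model}
```

Keeping the fallback explicit reflects the trade-off Mari-Sanna describes: separate models where the data allows it, a single combined model where languages can’t be cleanly separated.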

Jon: Yes, I suppose so. But I suppose the nice thing, as you say, is that with the training process you could have a very different level of language for a game played by young teenagers compared with one that is, for whatever reason, an 18-plus game. You’re not just throwing a monolithic machine learning system at it without any way of changing what you allow, because clearly adults are going to talk differently from children.

Mari-Sanna: Yes, that’s true, and that’s why we think how we build the AI models is very important. Each model only learns the language used in that game and adapts to how that language changes over time in that game, so it’s always different from other games, because the audience is slightly different everywhere.

Jon: One of the games you highlight as a client is Star Stable, which is a PC and now mobile horse-riding MMO. Its audience obviously skews quite young and kind of female, because it’s horses; like a lot of these games, I think it’s played by preteens and upwards. Can you talk a bit about how it’s worked out for them as a client and how that process has changed over time?

Mari-Sanna: Yes, any kid can play this game, but it’s not for adults. It’s played in many countries in Europe and also in the US, and there are over 15 languages used in the discussions. We have a Utopia AI Moderator model running for each of the languages. It pre-moderates each chat message between the kids to prevent them from bullying each other or revealing personally identifiable information about themselves to other kids.

Grooming is also something that very much needs to be prevented in this kind of kids’ community. Star Stable has defined its moderation policy, what type of content it wants to accept and what it doesn’t, and the AI automates that by learning from human decisions. The moderation is updated regularly: we at Utopia take care of the AI, and Star Stable defines its moderation policy and updates it whenever it wants to.

“Grooming is something that very much needs to be avoided in this kind of kids’ community. Star Stable has defined their moderation policy, what type of content they want to accept there and what they don’t want to accept, and the AI automates that by learning from human decisions.”

Mari-Sanna on moderating Star Stable

Jon: That’s quite interesting, then. It’s not just dealing with foul language; it’s also about making a safe space, because some children don’t understand when they’re giving away too much information. That’s actually quite a subtle, different feature that you can bring.

Mari-Sanna: Yes, that’s one example. There’s the COPPA law (Children’s Online Privacy Protection Act) in the US, and it is quite important to do this kind of moderation as well.

Jon: Do you see more interest among games companies? Now that you’ve been doing this for quite a few years, do you think games companies are still a little unaware of it, and stuck with just spam filtering or word filtering?

Mari-Sanna: Yes, some games companies are very responsible with their audiences. They have focused on moderation tools and other tools that protect their players from other players who don’t behave. But some games are not that focused on it. I think that if we were to organise a big event in real life, we would have some kind of security people there; in most countries, that’s required by law. In my opinion, online games need the same kind of structures: if very many people can interact with each other, there should be some kind of security system that makes sure users aren’t harming each other.

“I think that if we were to organise a big event in real life, we would have some kind of security people there. In most countries, that’s required by law. In my opinion, online games need the same kind of structure: if very many people can interact with each other, there should be some kind of security system that makes sure users aren’t harming each other.”

Mari-Sanna on why games should be moderated

Jon: As you say, there are probably various legal rules in different jurisdictions around safeguarding, certainly of minors. It’s an issue, I guess, and privacy in general is becoming more important. At the moment you’re text-based, so where does that sort of technology go next? How soon could you do something like voice? Because obviously that’s the next level up, and much more complicated. Is that on the roadmap, or does it require more technical breakthroughs?

Mari-Sanna: Yes, we can do it already. Voice chats are a bit different from text chats in that they can’t be pre-moderated. If you don’t want to delay how people interact, you can’t touch the voice signal. What you can do is notice, a few seconds later, if something ugly or otherwise inappropriate was said. Technically, that is done by first using a speech-to-text tool and then giving the text to Utopia AI Moderator. As we know, speech-to-text tools don’t always work perfectly. Some languages work very well, and others not so well.

For example, English works pretty well, but when there’s slang and very casual talk, the text you get out of it might be quite garbled. It would be very difficult for a human to read it and decide whether it’s improper or not, but our AI can read that as well. As long as the speech-to-text tool is consistent in its errors, our tool learns to understand what the content is.

Moderating voice chat is the next step in moderation. For example, it could be used to give users negative points if they don’t behave, and then ban them from the game if they get too many points. I suppose that would be a very effective way to make sure participants stay calm and pleasant to other people.
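Mari-Sanna describes voice moderation as post-moderation: speech-to-text runs first, the transcript is classified a few seconds later, and misbehaviour could earn negative points that eventually lead to a ban. The Python sketch below shows only that penalty logic. It assumes the speech-to-text step has already produced a transcript, uses a stand-in classifier, and the names, one point per violation and the default threshold of three are hypothetical choices for illustration.

```python
from collections import defaultdict
from typing import Callable, Dict, Set


class StrikeTracker:
    """Hypothetical penalty system: negative points for rejected voice transcripts, ban at a threshold."""

    def __init__(self, classify: Callable[[str], str],
                 points_per_violation: int = 1, ban_threshold: int = 3) -> None:
        self.classify = classify  # stand-in for a text moderation model
        self.points_per_violation = points_per_violation
        self.ban_threshold = ban_threshold
        self.points: Dict[str, int] = defaultdict(int)
        self.banned: Set[str] = set()

    def review_transcript(self, user_id: str, transcript: str) -> bool:
        """Post-moderate one speech-to-text transcript after it has already been heard.

        Returns True if the user is banned after this review.
        """
        if user_id in self.banned:
            return True
        if self.classify(transcript) == "reject":
            self.points[user_id] += self.points_per_violation
            if self.points[user_id] >= self.ban_threshold:
                self.banned.add(user_id)
        return user_id in self.banned


def toy_classifier(transcript: str) -> str:
    # Placeholder only; a real system would use a trained model.
    return "reject" if "idiot" in transcript.lower() else "accept"


tracker = StrikeTracker(toy_classifier, ban_threshold=2)
```

Because the audio has already been heard by the time the transcript is reviewed, the consequence falls on the speaker’s record rather than on the message itself, which is why a points-and-ban scheme fits voice better than pre-moderation does.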

Jon: In general, on the timing point, how quickly does the system work?

Mari-Sanna: We’ve never had any trouble with that in practice. The moderation part is very quick on our end, and then there’s, of course, the network delay, but in a normal chat you hardly notice a delay of one second, and it’s always below that.

Jon: I imagine your server costs are quite high, though.

Mari-Sanna: Well, when you have a lot of traffic on the servers, yes.

Jon: Exactly.

Mari-Sanna: You would pay for that.

Jon: One of the interesting things about running this kind of company is that you certainly have to spend a lot of time looking at server locations and optimising all your code for servers and stuff. Cool, good.

Kalle, what do you make of that? I don’t know if you’re an expert in this sort of thing. I guess games companies do deal with things like the privacy rules we’ve heard about, and COPPA when that came in in the States. With the high-level stuff, I think big companies’ legal teams are told to deal with it, but I don’t hear much talk about this sort of thing, which is funny, I guess, as we’re seeing more user-generated content and more casual stuff, as you said. Do you think people should be taking this a lot more seriously?

Kalle: Yes, I definitely do. It was fascinating to hear you both discuss this. As you mentioned, there’s user-generated content: we have all these new games leveraging it, and games built solely on user-generated content, like Roblox. Like I discussed before, all the different ways to socially interact inside games are on the rise. A good example is social hangouts, where one of the major components is that you communicate via text and voice chat. Sometimes you even have boards you can draw on.

Being able to moderate that content will be essential for all games, especially games that have these kinds of modes or support user-generated content. The only visibility I have into this topic is that in some of the games I’ve played, when you type something, you sometimes just see that it’s been blocked. I personally spend a lot of time with Chinese games, so I bump into that kind of thing quite often.

Jon: Yes, there’s a lot of moderation there. Can you moderate images at the moment? Can you build that into your system?

Mari-Sanna: Yes, we moderate images as well. It works the same way as text moderation: we train a separate image model for each game, depending on what kind of content they want to accept and what not.

Jon: Fascinating. I guess if you can get input into the machine learning model, then that’s it, then it deals with it, doesn’t it? The clever bit is that inflow into the model.

Mari-Sanna: That’s right.

Jon: Lovely. Thank you both very much for your expertise in talking about the trends and the specifics of what games companies should be doing to deal with things like moderation. Thanks very much to everyone who’s been watching and listening, whether to the podcast or the video. Thank you to Kalle.

Kalle: Thank you.

Jon: Thank you to Mari-Sanna.

Mari-Sanna: Thank you very much.

Jon: Remember, we publish a podcast about what’s going on in games, and there’s so much going on in games now. What would have been seen as very technically advanced techniques are now commonplace, I think. As mobile games grow, they take up more of the gaming and technology space, and more things come in.

We managed to get through the entire episode without talking about the metaverse, so that was quite good. Usually, we have to have some of that stuff in there. Anyway, thanks for watching and listening. Please subscribe to the channel and come back next time to see what’s going on in the world of the Mobile GameDev Playbook.